This project represents the 2009 iteration of my primary research goal: to design and build advanced intelligent machines (artificial general intelligence, or AGI) with mental capabilities similar to those of the mammalian brain. The Sapience architecture is described in detail here and in these slides. For very brief descriptions, see the 2-page papers here and here.

Note that this project is currently inactive (see update at the bottom).

I believe the goal of AGI is becoming increasingly attainable because:

This project was originally inspired by my motor control evolution project, an example of a simulated entity learning complex motor control with very little instruction from the human programmer. That led me to study other forms of machine learning, especially those that require little help from a human teacher, i.e. reinforcement learning. My MS thesis work (Verve) was basically an initial AGI prototype implementation which was limited to just a few sensors and effectors.

Generally, reinforcement learning is a great framework for designing intelligent machines which must learn from trial and error. However, it is only as effective as the machine's representation of the world. Thus, most of my research effort since 2006 has been focused on engineering an effective context representation, the internal representation of the outside world. In the brain this corresponds to the cerebral cortex. The system described here combines these two important aspects (a learned internal representation and reinforcement learning) with several other components in a brain-inspired architecture.

My research strategy here includes various subtasks, including:

Some of my (very rough) early notes and plans are documented here:

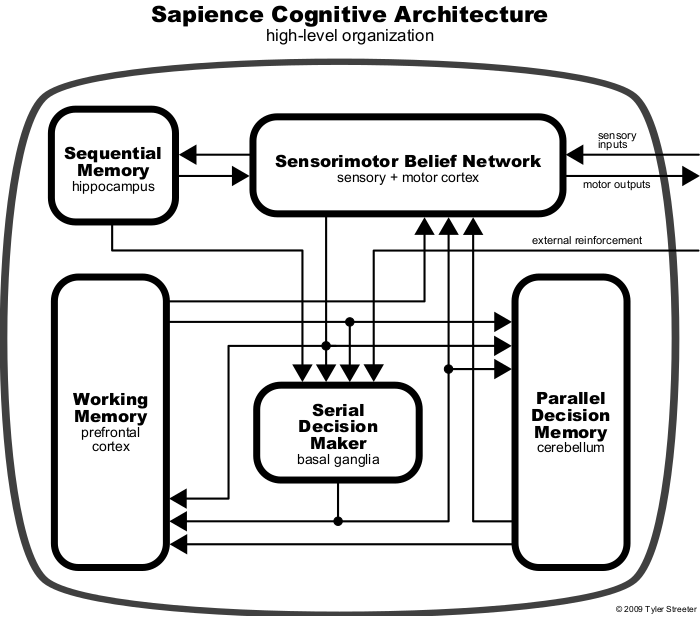

I eventually decided to focus on six brain regions, each represented by a separate component: sensory cortex, motor cortex, prefrontal cortex, hippocampus, basal ganglia, and cerebellum. (Note: later I began treating sensory and motor cortex as a single region, resulting in the final 5-component architecture.) These are described in the first poster below. The second poster goes into more detail about the sensory/motor cortex model.

The architecture comprises the five components depicted in the following diagram. The internals of each component are shown in separate diagrams. For further details, see the documents linked elsewhere on this page.

All components were implemented in a C++ library (with Python bindings) to be linked with any particular application.

The API was very simple: create a Brain, update a Brain, or delete a Brain. Brain creation involved specifying a number of sensory and motor modalities, along with their data types (binary, discrete, or real) and dimensionalities, plus an option for "brain size" which determined e.g. the internal resolution of the nodes in the Sensorimotor Belief Network. There were also options for thread-based parallelization and SIMD instruction support. Brain updates involved a set of straightforward functions for refreshing inputs (including reinforcement signals) and retrieving outputs at regular intervals. A later version of the API added a function to toggle wake/sleep mode (wake mode for data gathering, and sleep mode for learning from stored data).
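To make the create/update/delete lifecycle described above more concrete, here is a minimal Python sketch of what such an interface might look like. None of these names (`Brain`, `Modality`, `DataType`, `set_input`, `set_reinforcement`, `set_awake`, `update`) come from the actual Sapience library; they are assumptions made for illustration. The stand-in does no learning at all: it only checks input shapes and returns random binary outputs.

```python
import random
from dataclasses import dataclass
from enum import Enum


class DataType(Enum):
    BINARY = "binary"
    DISCRETE = "discrete"
    REAL = "real"


@dataclass
class Modality:
    """Hypothetical description of one sensory or motor modality."""
    name: str
    data_type: DataType
    dimensions: int


class Brain:
    """Illustrative stand-in only; not the real Sapience API."""

    def __init__(self, sensors, motors, brain_size=1, seed=0):
        self.sensors = {m.name: m for m in sensors}
        self.motors = {m.name: m for m in motors}
        self.brain_size = brain_size  # assumed knob for internal resolution
        self.awake = True
        self.stored_steps = 0
        self._inputs = {}
        self._reward = 0.0
        self._rng = random.Random(seed)

    def set_input(self, name, values):
        """Refresh one sensory modality, checking its dimensionality."""
        modality = self.sensors[name]
        if len(values) != modality.dimensions:
            raise ValueError(f"{name} expects {modality.dimensions} values")
        self._inputs[name] = list(values)

    def set_reinforcement(self, value):
        self._reward = float(value)

    def set_awake(self, awake):
        """Toggle wake mode (data gathering) vs. sleep mode (learning)."""
        self.awake = bool(awake)

    def update(self):
        """Advance one time step and return a dict of motor outputs."""
        if self.awake:
            self.stored_steps += 1  # placeholder for storing experience
        # Placeholder policy: random binary values, whatever the declared type.
        return {name: [self._rng.randint(0, 1) for _ in range(m.dimensions)]
                for name, m in self.motors.items()}


# Create a Brain, refresh its inputs, update it, then delete it.
brain = Brain(
    sensors=[Modality("joint_angles", DataType.REAL, 2)],
    motors=[Modality("torques", DataType.BINARY, 2)],
)
brain.set_input("joint_angles", [0.1, -0.4])
brain.set_reinforcement(1.0)
outputs = brain.update()
del brain
```

In an application, the input-refresh and `update` calls would be repeated at a regular interval, with the returned outputs sent on to the simulated effectors.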

Each Brain instance generated a log file:

One implementation detail worth mentioning involves the use of topographic maps. Because the Sensorimotor Belief Network assumed a hierarchical structure to help subdivide the data space, it required a sense of similarity between data dimensions to know how to partition them. I really didn't want to make any assumptions about how data dimensions were arranged, i.e. I wanted to treat all inputs/outputs as just a big bundle of wires. (Note that there have been experiments on live animals [frogs, I think] where an eyeball is unplugged, inverted, and plugged back in. Amazingly, the visual system automatically learns to rewire everything back to a functional state.)

I solved this problem by adding a topographic mapping layer between the raw data and the first layer of the belief network. This transformed things so that any given pair of data dimensions conveying similar data would be placed near each other on the output side (similar to how nearby pixels in an image tend to represent correlated data, but here we extend this principle to all data types and even mixtures of data modalities). The transformed data dimensions were then ready to be subdivided into groups to be passed along to the hierarchical Sensorimotor Belief Network. This all happened automatically, freeing the user from having to specify any structure among the input/output data, which vastly simplified the API.
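The idea of placing similar data dimensions near each other can be illustrated with a small sketch. This is not the mapping layer used in Sapience: it swaps in a much simpler technique, a greedy one-dimensional ordering based on absolute Pearson correlation, and it only handles numeric data. The function names (`pearson`, `topographic_order`, `group`) and the toy data are assumptions made for illustration.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def topographic_order(samples):
    """Greedily order dimensions so correlated ones end up adjacent.

    samples: list of rows, one value per data dimension in each row.
    Returns a list of dimension indices forming a 1-D layout.
    """
    dims = len(samples[0])
    columns = [[row[d] for row in samples] for d in range(dims)]
    sim = [[abs(pearson(columns[i], columns[j])) for j in range(dims)]
           for i in range(dims)]
    order = [0]
    remaining = set(range(1, dims))
    while remaining:
        last = order[-1]
        # Pick the unplaced dimension most similar to the last placed one.
        nxt = max(remaining, key=lambda d: (sim[last][d], -d))
        order.append(nxt)
        remaining.remove(nxt)
    return order


def group(order, size):
    """Split the ordered dimensions into consecutive groups."""
    return [order[i:i + size] for i in range(0, len(order), size)]


# Toy "bundle of wires": dimensions 0 and 2 carry one signal,
# dimensions 1 and 3 carry another, interleaved with no labels.
rng = random.Random(0)
samples = []
for _ in range(500):
    a, b = rng.gauss(0, 1), rng.gauss(0, 1)
    samples.append([a + rng.gauss(0, 0.1), b + rng.gauss(0, 0.1),
                    a + rng.gauss(0, 0.1), b + rng.gauss(0, 0.1)])

order = topographic_order(samples)
groups = group(order, 2)
```

With this toy data, the two dimensions carrying the same underlying signal land in the same group, so each group could be handed to one node in the first layer of a hierarchy without the user describing any structure up front.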

To inspect various internal aspects of a Sapience Brain in real time, I also implemented a tool that I called the Sapience Probe (also called the Debugger in some of the screenshots below). The Probe could take a reference to any active Brain and query all kinds of things: current raw input/output data, current internal activation states, various key one-dimensional quantities (like reinforcement/value signals), performance profiling info, etc.

The Sapience Brain and Probe were initially structured as a client and server, where any Brain could act as a server, and a Probe running on a separate machine could connect and start the inspection process. Later I simplified it to be just a separate library that could be linked with the main application (so an application would link the sapience lib and, optionally, the sapience-probe lib), and the Brain reference would just be passed to the Probe within the same application. (I made this change only because I ended up doing all my tests on the same machine anyway, and the client/server approach just added latency and programming complexity.)

Data could be viewed in several ways. There were simple real-time plots for one-dimensional values, which looked like some sort of medical monitor. These were quite helpful and interesting to watch, especially when interacting with a learning system in real time and watching the plots change in response:

There were also visualizations of sensory and motor data modalities, including both the raw data and the internal representations. Here are two examples. The left image shows an early version (which looks a bit sheared here because it was actually a 3D view being inspected from an odd angle). The right image shows a later version, where the modalities are now placed in a flat circular arrangement, which could be inspected more closely by zooming into the various nodes. In both cases, note that the bottom layer includes both actual and reconstructed raw data: the system is continually sending real data up the hierarchy and predictions back down.

Note the bright green meters shown around each node in the hierarchy. Each meter represented the node's current degree of surprise (KL divergence of posterior from prior), measured by comparing bottom-up and top-down signals. A meter would start out as a complete circle (representing complete surprise) and gradually diminish as the model learned to represent the data domain.
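As a rough illustration of this surprise measure, the sketch below computes the KL divergence between two discrete distributions. It assumes that the top-down prediction plays the role of the prior and that the belief after incorporating bottom-up evidence plays the role of the posterior; the function name, the example distributions, and the use of discrete distributions at all are assumptions, not details taken from the Sapience implementation.

```python
import math


def kl_divergence(posterior, prior, eps=1e-12):
    """KL(posterior || prior) in nats for two discrete distributions.

    Terms where the posterior is zero contribute nothing; the prior is
    floored at eps to avoid division by zero.
    """
    return sum(q * math.log(q / max(p, eps))
               for q, p in zip(posterior, prior) if q > 0)


# Belief after seeing the data (assumed posterior).
posterior = [0.9, 0.05, 0.05]

# Early in learning, the top-down prediction is uninformative.
early_prior = [1 / 3, 1 / 3, 1 / 3]
# Later, the top-down prediction is close to what actually arrives.
late_prior = [0.8, 0.1, 0.1]

early_surprise = kl_divergence(posterior, early_prior)
late_surprise = kl_divergence(posterior, late_prior)
```

Here `early_surprise` is larger than `late_surprise`, which mirrors the meters shrinking as top-down predictions improve. How the raw divergence was scaled to fill a circular meter is not stated in the text, so no scaling is shown.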

Here are more examples with vision data, this time zoomed into the internal representations within each node of the perceptual hierarchy:

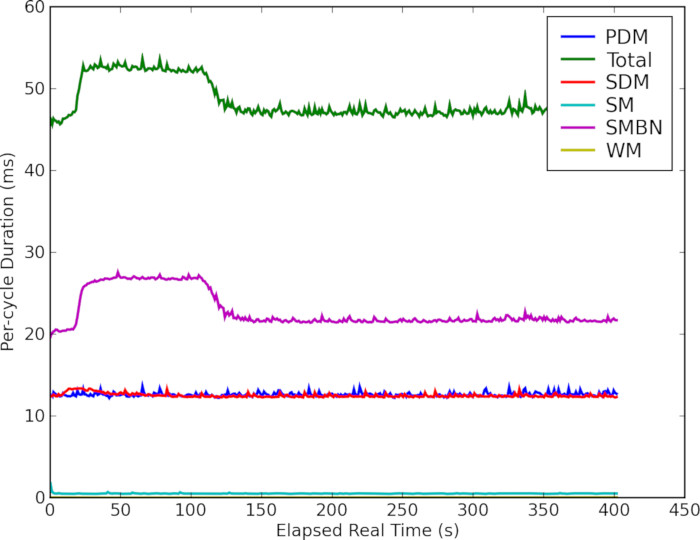

Data could be exported for later processing. For example, runtime performance of each component could be measured, exported, and plotted:

Not shown here are visualizations of other components. For example, the contents of the memory cells in the Working Memory component could be displayed as an array. Overall, it was possible to jump between components to see how each one was learning/responding to the current situation. It really did feel like using a debugger (in an IDE), but for a simulated brain instead of a normal program.

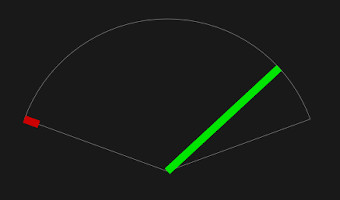

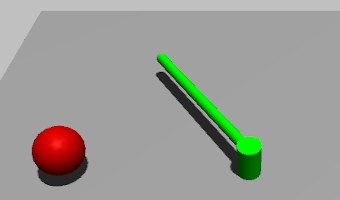

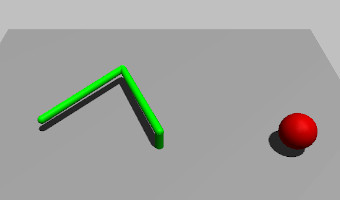

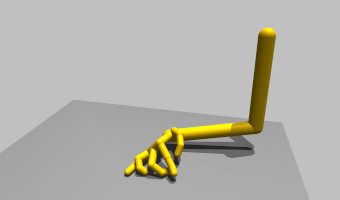

I made a few simulation environments for experiments involving sensory, motor, and reinforcement signals. The ones shown here include various types of embodied arms, ranging from a simple line segment to a human-like arm and hand:

Below you can see the humanoid arm with added visualizations for its 32x32 vision and binary touch sensor array. This environment could be used for a variety of tasks (i.e. reinforcement signals), but generally I wanted to focus on curiosity here, i.e. emergent, self-directed tasks in an open-ended, playground-like environment.

Note: the motor outputs are described here as binary, i.e. applying either zero or some fixed torque at a joint. The document linked here also mentions using "force-limited velocity constraints" (FLVCs), which would use two real values per joint (one to set a desired velocity, and another to set a maximum force output). My original plan was to use FLVCs, but I later switched to binary motors. I made this change because I wanted to keep the raw input/output data as simple as possible (i.e. not requiring a separate control system, even a very simple one like an FLVC). I generally wanted the system to learn any useful transformations directly from the data without any extra help. So, if an FLVC or PID controller is actually helpful, the system would need to construct its own form of these mechanisms internally.
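The difference between the two motor schemes can be sketched for a single rotational joint. This is a simplified illustration, not the simulation code used for these experiments: it treats the "maximum force" as a maximum torque, models the joint as a bare inertia with no gravity or friction, and every function and parameter name is an assumption.

```python
def binary_motor_torque(bit, fixed_torque):
    """Binary motor: apply either zero torque or one fixed torque."""
    return fixed_torque if bit else 0.0


def flvc_torque(velocity, desired_velocity, max_torque, inertia, dt):
    """Force-limited velocity constraint for one joint.

    Computes the torque that would reach the desired velocity in a single
    time step, then clamps it to the allowed maximum magnitude.
    """
    needed = inertia * (desired_velocity - velocity) / dt
    return max(-max_torque, min(max_torque, needed))


def step_velocity(velocity, torque, inertia, dt):
    """Advance the joint's angular velocity by one explicit Euler step."""
    return velocity + (torque / inertia) * dt


# Drive a joint from rest toward 1.0 rad/s with a tight torque limit.
inertia, dt = 1.0, 0.1
velocity = 0.0
history = []
for _ in range(30):
    torque = flvc_torque(velocity, 1.0, max_torque=0.5, inertia=inertia, dt=dt)
    velocity = step_velocity(velocity, torque, inertia, dt)
    history.append(velocity)
```

With an FLVC, the controller does the work of converging on the target velocity. With binary motors, the learning system only chooses the bit passed to `binary_motor_torque` at each step, so any comparable velocity regulation has to emerge from what it learns.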

The following images show the Probe connected to Brains driving different versions of the 3D 2-DOF arm: one showing joint angle and goal position sensors, one showing joint angle and vision (pixel array) inputs, and one showing the two motor outputs (labeled "elbow" and "shoulder"):

Finally, here is an example of the Probe connected to the 3D 2-DOF arm (zoomed in here on the proprioception/joint angle modality) with the simulation running in the background:

I've always found biological inspiration to be generally helpful for AI research, especially in the form of broad principles and constraints to help narrow the search space. But after finishing the initial version of Sapience in 2009 (both architecture and software implementation), I began to struggle with the compromises required to maintain both biological realism and functionality.

In 2010 I started investing a lot of time into learning/relearning the fundamentals (math, probabilistic modeling, statistics, etc.) to be able to explore machine learning at a deeper level. By the end of the year, I realized I was gaining more traction as a researcher by focusing on more abstract theoretical principles, so I decided to focus less on the biological aspects of intelligence. My change of focus is described in more detail in the preface here.